Review written by Andy Jones (COS, G3)

In many countries, cameras are nearly ubiquitous in public life. When you are in a public place, such as at a stoplight, in a store, or in a hospital, you are often photographed.

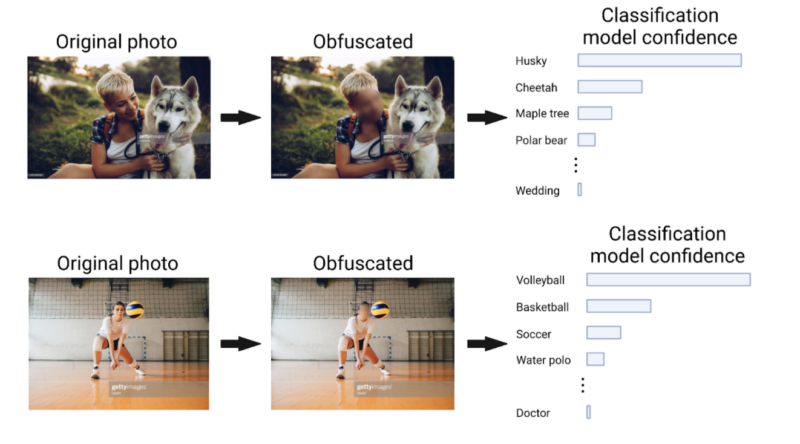

When these photographs are collected into large datasets, they can be useful for developing machine learning (ML) solutions to real-world problems. For example, automated analysis of stoplight photographs could improve traffic flow, and examining customer behavior patterns in clothing stores could improve the shopping experience. However, a large fraction of these photographs contain personally identifiable information, such as faces, addresses, or credit card numbers, which raises concerns about the privacy of the individuals identified in them. Thus, at first glance, there appears to be a tradeoff between using large datasets of images to train ML algorithms and protecting people’s privacy. But what if the people in these images could somehow be anonymized to protect their privacy, while the images could still be used to build useful ML models?
