Review written by Sara Camilli (QCB) and Adelaide Minerva (PNI)

As we go about our daily lives, we rarely think consciously about all the real-world landmarks we use to position ourselves in space. Yet as we walk to our local coffee shop or go for a jog in the park, our brain is continuously updating its internal representation of our location, which is critical to our ability to navigate the world. We also know that humans and a number of other animals can update this internal representation of their position even in the absence of external cues. This phenomenon, known as path integration, involves interactions among the parietal cortex, the medial entorhinal cortex (MEC), and the hippocampus. Prior work has shown that grid cells in the MEC have firing fields arrayed in a hexagonal lattice that tiles an environment. Further, there is evidence that the MEC receives inputs encoding the velocity at which an animal is moving, which can be used to update the animal’s internal estimate of its position. Together, these features support a role for the MEC in path integration.

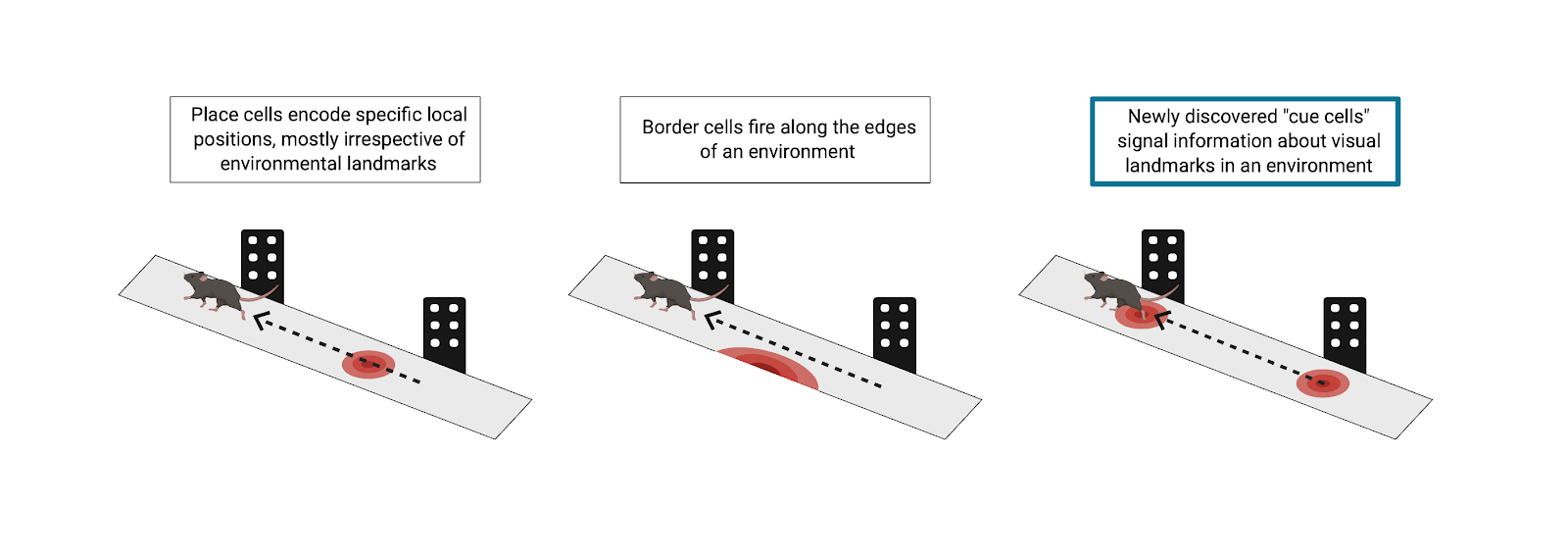

As with many systems in the brain, path integration relies on estimates and is therefore error-prone. Border cells in the MEC can support some error correction in simple environments by firing in response to an environment’s borders, but researchers at Princeton, led by Principal Investigator David Tank, hypothesized that navigational error correction had to involve more than border cells. The world is complex and full of diverse objects at varying locations. Border cells alone cannot account for how the brain integrates input from all of these landmarks and supplies grid cells with the information needed for error correction, getting you where you need to go without running into obstacles. In a recent publication, Kinkhabwala et al. describe a novel class of neurons, which they term “cue cells.” By encoding complex physical landmarks, these cue cells appear to play a key role in the continual error correction that path integration requires.

The team tested this hypothesis in mice using virtual reality (VR) and advanced neural recording tools. This approach allowed Kinkhabwala et al. to control completely the visual input the animals received. To picture the experiment, imagine a mouse running down a VR track. As it runs, it passes various virtual objects, some on the left and some on the right. This setup emulates what a mouse might experience in the real world while running through a sensory-rich forest. As the mice navigated the virtual environments, the researchers used tetrode recordings to measure the electrophysiological activity of neurons in the MEC. The most exciting finding was the response of a previously unclassified fraction of MEC neurons: as the mouse ran along the virtual track, a subpopulation of these cells fired only when the mouse passed an object. To classify these neurons further, Kinkhabwala et al. calculated each cell’s “cue score,” a measure of how closely the cell’s firing tracked the virtual visual landmarks in the environment. Approximately 13% of all recorded MEC neurons had a cue score above a predetermined threshold and were defined as cue cells.

After identifying cue cells in the MEC, the researchers sought to relate these neurons to previously defined spatial cell types in this region, including grid cells, border cells, and head direction cells. To do this, Kinkhabwala et al. used tetrodes to record from the same MEC neurons while the animals explored a real (non-VR) two-dimensional arena. They then calculated grid, border, and head direction scores for all cells, both cue and non-cue cells. The majority (63%) of cue cells showed more complex and less predictable firing patterns than those of previously defined cell types and did not also qualify as another spatial cell type. Further, these uncharacterized activity patterns remained stable across exploration of the arena, suggesting that they encoded some unique feature of the environment rather than reflecting random firing. However, a proportion of MEC cells did meet the criteria for more than one spatial cell type. Such “mixed selectivity” may be useful during path integration, allowing accumulating sensory evidence to be weighted differently according to its reliability in various real-world environments. The extent to which the brain uses mixed selectivity in different navigational contexts remains an open research question.

Once the researchers had established cue cells as distinct from other spatial cell types, they went on to characterize other features of these neurons. Examining activity patterns along the VR track more closely, the team noticed that cue cells fired in sequence as the animal approached a landmark. When mice passed an object on their left, one group of these cells fired in sequence, whereas when mice passed an object on their right, a different group fired in sequence. Describing cue cells as strictly “left” and “right” groups is a simplification, but these findings indicate that the identified cue cells were largely divided into left- and right-landmark-preferring groups. Further, cue cells kept consistent representations across environments.

Kinkhabwala et al.’s results reveal previously unidentified cue cells in the MEC. These specialized neurons appear to provide the landmark position information needed for path integration error correction, information that other spatial cell types alone do not supply. After all, navigating the complex, object-rich real world is no simple feat. Future studies might investigate how specifically these cue cells respond to different kinds of landmarks. For example, the stimuli presented in these experiments were purely visual, but do cue cells also encode multimodal landmarks? And do these neurons preferentially encode emotionally salient objects in the environment? Through their groundbreaking research, Kinkhabwala and the team at Princeton have brought us one step closer to answering these questions and to understanding how the brain accurately estimates position during spatial navigation.

The original article was published in eLife on March 9, 2020.