Review written by Aishwarya Mandyam (COS, G1)

Self-driving and partially self-driving cars are increasingly within sight. However, before these vehicles can make their mainstream debut, consumers must be assured of their safety. We expect a human driver to have sufficient intuition to avoid pedestrians and stationary objects, and we would naturally expect self-driving cars to exhibit the same level of caution. In particular, these cars should be able to “see” and “act” like human drivers, so a self-driving car’s collision and error detection system must reason effectively about what it cannot see. In a new paper out of Professor Felix Heide’s group in the Princeton COS department, in collaboration with Mercedes-Benz, Scheiner et al. introduce a method that uses Doppler radar to enable cars to see around corners. Their method for tracking hidden objects allows for more effective error detection, which can make these vehicles better suited to operating in the real world, where safety is a primary concern.

Currently, hidden objects that are not in the direct line of sight (LOS) pose a challenge for a self-driving car: it cannot anticipate their movements, which may lead to a collision. These moving objects include vulnerable road users such as cyclists and pedestrians, who are not always in sight and can be hidden around corners. Existing non-line-of-sight (NLOS) object identification methods rely on indirect reflections, that is, measurements of a faint signal, to identify hidden objects. However, these measurements are often so faint that they can be written off as noise, and noisy measurements are typically ignored. For example, if a self-driving car treats a measurement from a real hidden object as noise because it closely resembles “background measurements”, the car may not anticipate the object’s movements, and unexpected movements can result in a collision. Even the most advanced NLOS techniques, which send out short pulses of light and measure the time the light takes to reflect, only work reliably in highly controlled experimental environments [1, 2]. Other sensing methods that are not NLOS, such as those based on computer vision, cannot see around corners and can therefore only analyze what is directly visible to the sensors. All of these methods are also generally unsuitable for real-life scenarios in which the car is traveling at high speed in a large outdoor space.

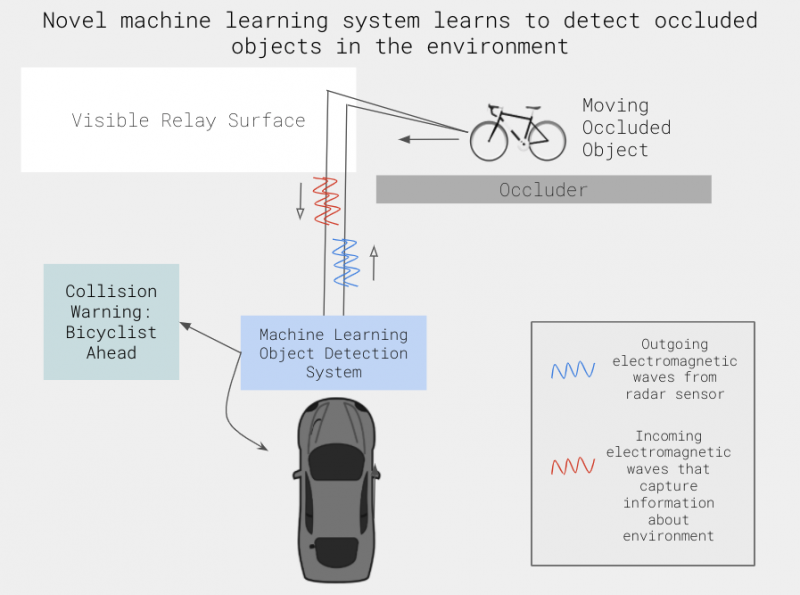

Instead of using visible light, Scheiner et al.’s method uses radar sensors that emit microwave electromagnetic radiation. These waves can identify targets based on the scattering behavior that a wave produces when it hits a target. There are two types of scattering behavior that the authors use to estimate an object’s velocity, range, and angle: diffuse and specular. Diffuse responses capture information about an object’s trajectory at angles greater than 90 degrees between the wall and the object’s direction of motion. Surfaces that are flat relative to the wavelength will have a specular response, like a mirror; the angle of incidence is the same as the angle of reflection. The authors use a combination of the specular response and the diffuse response to effectively identify and track an otherwise occluded object regardless of whether it is still or moving.
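To make the mirror analogy concrete: when a wave reaches a hidden object through a single specular bounce off a flat wall, the sensor perceives the object along the reflected path, so the detection appears as a "virtual" point behind the wall. Reflecting that virtual point back across the wall recovers the real position. The sketch below illustrates only this geometry in 2-D; the function name and the scene coordinates are hypothetical, and it is not the authors' implementation.

```python
def reflect_across_wall(point, wall_a, wall_b):
    """Mirror a 2-D point across the infinite line through wall_a and wall_b."""
    px, py = point
    ax, ay = wall_a
    bx, by = wall_b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        raise ValueError("wall endpoints must be distinct")
    # Project the point onto the wall line to find the closest wall point,
    # then step the same distance past it to reach the mirror image.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    foot_x, foot_y = ax + t * dx, ay + t * dy
    return (2 * foot_x - px, 2 * foot_y - py)


# Hypothetical scene: a wall along the line x = 5, and a virtual detection
# that appears 2 m behind the wall at (7, 3).
virtual_detection = (7.0, 3.0)
real_position = reflect_across_wall(virtual_detection, (5.0, 0.0), (5.0, 10.0))
print(real_position)  # (3.0, 3.0)
```

The recovered point lies 2 m in front of the wall, the same distance at which the virtual detection appeared behind it.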

The authors propose a neural network to detect and track hidden objects from radar data. A radar sensor combines signals across locations to form a Bird’s Eye View point cloud representation. A point cloud represents an object or space as a collection of points with X, Y, and Z coordinates. A neural network is a mathematical function, roughly inspired by biological neural networks, that uses a connected set of “neurons” to process data and predict some outcome. The connections between these neurons are learned through a process called training. During training, the network tries to predict a target outcome, and it knows how close it is to that outcome because it is supplied with “ground truth” data. The goal of Scheiner et al.’s neural network is to (1) convert a point cloud representation of a scene into an image of that scene, (2) generate a 2-D bounding box to detect an object, and (3) track the object to predict its future location.
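To give a feel for what turning a point cloud into a top-down image means, here is a minimal sketch that counts how many points fall into each cell of a Bird's Eye View grid. This is a simple hand-written rasterization, not the learned conversion performed by the authors' network; the function name, grid extents, and sample points are all assumptions made for illustration.

```python
import math


def rasterize_bev(points, x_range, y_range, cell_size):
    """Count points per cell in a top-down (x, y) grid; the z value is ignored."""
    x_min, x_max = x_range
    y_min, y_max = y_range
    n_cols = math.ceil((x_max - x_min) / cell_size)
    n_rows = math.ceil((y_max - y_min) / cell_size)
    grid = [[0] * n_cols for _ in range(n_rows)]
    for x, y, *_ in points:
        # Skip points that fall outside the area covered by the grid.
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue
        # Clamp to guard against floating-point rounding at the far edge.
        col = min(int((x - x_min) / cell_size), n_cols - 1)
        row = min(int((y - y_min) / cell_size), n_rows - 1)
        grid[row][col] += 1
    return grid


# Hypothetical point cloud of (x, y, z) points; the last one is out of range.
cloud = [(0.5, 0.5, 0.0), (0.7, 0.2, 1.0), (2.5, 1.5, 0.0), (9.0, 9.0, 0.0)]
image = rasterize_bev(cloud, x_range=(0.0, 4.0), y_range=(0.0, 2.0), cell_size=1.0)
for row in image:
    print(row)
# [2, 0, 0, 0]
# [0, 0, 1, 0]
```

Each grid cell plays the role of a pixel, which is what lets image-based detectors draw 2-D bounding boxes on data that started out as a set of points.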

To train their network, the authors capture “in-the-wild” automotive scenes using a set of radar sensors mounted to a prototype car. The scenes also include pedestrians and cyclists who are equipped with their own tracking systems, so that the network can learn from the ground truth locations of these vulnerable road users.

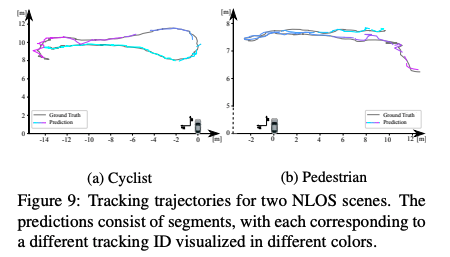

Scheiner et al. use a combination of qualitative and quantitative metrics to assess this network. Qualitatively, the authors note that the network reliably predicts the location and trajectory of occluded cyclists and pedestrians hidden behind different types of walls. Quantitatively, they report detection and tracking accuracy for cyclists and pedestrians on their test set. They quantify tracking error by comparing the predicted and ground truth object trajectories, as seen in Figure 9.
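One common way to compare a predicted trajectory with its ground truth is the mean Euclidean distance between corresponding positions. The sketch below shows that generic measure; this review does not state the exact error metric used in the paper, so the function name and the sample trajectories are assumptions for illustration only.

```python
import math


def mean_displacement_error(predicted, ground_truth):
    """Average Euclidean distance between matching points of two trajectories."""
    if len(predicted) != len(ground_truth):
        raise ValueError("trajectories must have the same number of points")
    if not predicted:
        raise ValueError("trajectories must not be empty")
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Hypothetical trajectories sampled at the same three time steps (metres).
predicted_track = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
true_track = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
print(mean_displacement_error(predicted_track, true_track))  # 1.0
```

Here the prediction drifts by 0 m, 1 m, and 2 m at the three time steps, giving an average error of 1 m.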

The authors find that their method for detecting and tracking occluded objects achieves higher accuracy in detecting and classifying pedestrians and cyclists than benchmark methods such as SSD (Single Shot Multibox Detector) [3], which generates bounding boxes for objects, and PointPillars [4], which detects objects from point clouds. Additionally, the method achieves low error and high localization and tracking accuracy on both NLOS and LOS data. The authors conclude that this strategy can be used effectively as a pedestrian and cyclist collision warning system on real-life data.

In this recent paper, Scheiner et al. introduce a novel method that uses Doppler radar to detect and track NLOS, occluded objects. This work, in combination with future endeavors to integrate with existing sensor systems, can enable efficient, cheaper sensing systems that increase consumer trust in the safety of self-driving vehicles.

Professor Felix Heide, lead author of this study, explains, “We are super excited about bringing this technology to consumer vehicles next. In contrast to costly lidar sensors, the radar sensors we used are low-cost and ready for series production, and so we see this as a practical step towards letting the vehicles of tomorrow see around street corners.”

The original paper was published at the Conference on Computer Vision and Pattern Recognition (CVPR) in June 2020. Please follow this link to view the full version.

References

[1] C. Saunders, J. Murray-Bruce, and V. K. Goyal. Computational periscopy with an ordinary digital camera. Nature, 565(7740):472, 2019.

[2] K. L. Bouman, V. Ye, A. B. Yedidia, F. Durand, G. W. Wornell, A. Torralba, and W. T. Freeman. Turning corners into cameras: Principles and methods. In IEEE International Conference on Computer Vision (ICCV), pages 2289–2297, 2017.

[3] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollar. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, pages 2980–2988, 2017.

[4] A. H. Lang, S. Vora, H. Caesar, L. Zhou, J. Yang, and O. Beijbom. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 12697–12705, 2019.