Review written by Andy Jones (COS, GS)

Understanding the link between neural activity and behavior is one of the long-running goals of neuroscience. Increasingly, neuroscientists take a data-driven approach to studying animal behavior in order to gain insight into the brain. Under this approach, scientists collect hours’ or days’ worth of video recordings of an animal and rely on modern machine learning (ML) systems to automatically pinpoint the locations of body parts and classify types of behavior. These methods have opened the door to larger-scale studies of the relationship between brain activity and behavior, without relying on laborious manual annotation of animal movements.

However, these ML systems can typically analyze only recordings that contain a single animal. Studying how animals interact is crucial for understanding behavioral patterns as a whole, and eventually for gaining insight into the neural signals that underpin these behaviors.

To address this limitation, researchers across Princeton departments (including Neuroscience, Ecology and Evolutionary Biology, and Physics), led by graduate student Talmo Pereira, have developed a new computational tool that can track the movements of multiple interacting animals simultaneously. Their framework, Social LEAP Estimates Animal Poses (SLEAP), extends current state-of-the-art deep learning-based pose-tracking systems to handle the challenges that arise when multiple animals share the same video frame. These challenges include animals occluding one another and animals appearing at different depths in the scene.

Tracking animal motion is often cast as a problem of “pose estimation”: identifying the spatial coordinates of an animal’s body parts. For a given video frame, estimating an animal’s pose first requires locating a small set of anatomical “landmarks”; for example, the animal’s neck might serve as an anchor. Using these landmarks, the system then identifies and labels the coordinates of the animal’s other body parts, such as the legs, torso, and head. Finally, the system must link each animal’s pose across consecutive video frames so that its movements can be followed smoothly over time.
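To make the data involved more concrete, here is a minimal sketch of how a single pose, and an animal’s track of poses over time, might be represented. The body-part names, the `Pose` class, and the `displacement` helper are hypothetical illustrations chosen for this example, not SLEAP’s actual data model.

```python
import math
from dataclasses import dataclass

# Hypothetical skeleton; these part names are illustrative, not SLEAP's own definitions.
SKELETON = ("head", "neck", "thorax", "left_leg", "right_leg")


@dataclass
class Pose:
    """One animal's pose in one video frame.

    `points` maps each body-part name to its (x, y) pixel coordinates,
    or to None when the part is occluded or was not detected.
    """
    points: dict

    def __post_init__(self):
        # Reject part names that are not in the (hypothetical) skeleton.
        unknown = set(self.points) - set(SKELETON)
        if unknown:
            raise ValueError(f"unknown body parts: {sorted(unknown)}")

    def anchor(self, part="neck"):
        # Using the neck as the anchor follows the example in the text; it is an assumption.
        return self.points.get(part)


def displacement(track, part, frame):
    """Pixels moved by `part` between `frame - 1` and `frame` of one animal's track.

    `track` is a list of Pose objects for the same animal, one per consecutive frame.
    Returns None if the part is missing in either frame.
    """
    prev = track[frame - 1].points.get(part)
    curr = track[frame].points.get(part)
    if prev is None or curr is None:
        return None
    return math.dist(prev, curr)
```

For instance, if an animal’s neck is at (0, 0) in one frame and (3, 4) in the next, `displacement` reports a 5-pixel move; a part that becomes occluded simply yields `None` instead of a misleading distance.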

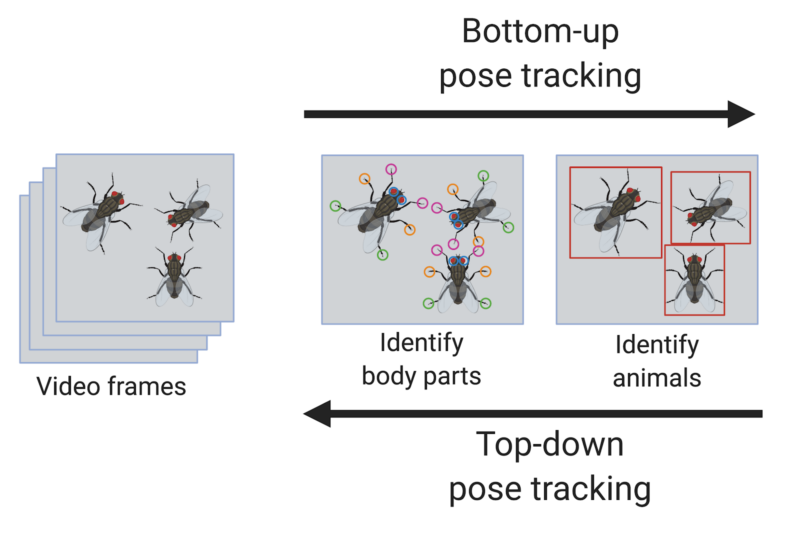

The few previous automated multi-animal tracking systems typically employed one of two strategies: top-down or bottom-up. In the top-down approach, each animal as a whole is located first, and then its constituent body parts are labeled. In the bottom-up approach, individual body parts are detected first, and then these parts are grouped and assigned to different animals. SLEAP is novel in that it supports both approaches within the same framework, letting users decide whether top-down or bottom-up is more appropriate for their experiment.
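The grouping step is what distinguishes the bottom-up strategy, so a toy version may help. The sketch below assumes one anchor detection (such as a neck) per animal and assigns each detected body part to the nearest free anchor using greedy nearest-distance matching. This rule and the `max_dist` cutoff are deliberately simple stand-ins chosen for illustration; they are not how SLEAP itself performs grouping.

```python
import math


def group_bottom_up(anchors, candidates, max_dist=50.0):
    """Assign detected body-part candidates to animals, bottom-up style.

    anchors: list of (x, y) anchor detections, one per animal (e.g. necks).
    candidates: dict mapping part name -> list of (x, y) detections of that part,
        with no information yet about which animal each detection belongs to.
    max_dist: hypothetical cutoff (pixels) beyond which no assignment is made.
    Returns one dict per animal: part name -> (x, y), or None if unassigned.
    """
    animals = [{"anchor": a} for a in anchors]
    for part, points in candidates.items():
        # Every (anchor, candidate) pairing, closest first.
        pairs = sorted(
            (math.dist(a, p), i, j)
            for i, a in enumerate(anchors)
            for j, p in enumerate(points)
        )
        used_animals, used_points = set(), set()
        for d, i, j in pairs:
            if d > max_dist:
                break  # all remaining pairs are even farther apart
            if i in used_animals or j in used_points:
                continue  # each animal gets at most one of each part, and vice versa
            animals[i][part] = points[j]
            used_animals.add(i)
            used_points.add(j)
        for animal in animals:
            animal.setdefault(part, None)  # e.g. occluded or too far from any anchor
    return animals
```

A top-down pipeline would instead start from each anchor, crop the region around it, and estimate that one animal’s parts inside the crop, so no cross-animal grouping step is needed.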

After developing their algorithm, the researchers applied SLEAP to video recordings of three animal models: bees, mice, and flies. For each predicted body-part location, they computed the error relative to the true location, as determined by manual annotation. They found that SLEAP located body parts with high accuracy in all three animal models: the error was around 1 pixel for most fly body parts, around 4 pixels for most mouse body parts, and around 5 pixels for most bee body parts.
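As a hedged illustration of this kind of evaluation, the sketch below measures each error as the Euclidean distance in pixels between a predicted point and its manually labeled counterpart, then summarizes each body part by its median error. The article does not state which summary statistic the authors used, so the choice of the median here is an assumption.

```python
import math
from statistics import median


def localization_errors(predicted, ground_truth):
    """Per-part Euclidean error (pixels) between predicted and manually labeled points.

    predicted, ground_truth: lists with one entry per labeled frame; each entry is a
    dict mapping part name -> (x, y). Parts missing from either side are skipped.
    Returns a dict: part name -> list of errors across frames.
    """
    errors = {}
    for pred, true in zip(predicted, ground_truth):
        for part, true_xy in true.items():
            pred_xy = pred.get(part)
            if pred_xy is None or true_xy is None:
                continue
            errors.setdefault(part, []).append(math.dist(pred_xy, true_xy))
    return errors


def summarize(errors):
    # Median error per body part (using the median rather than the mean is an assumption).
    return {part: median(errs) for part, errs in errors.items()}
```

As a worked example, a head predicted at (10, 10) whose true position is (13, 14) is off by √(3² + 4²) = 5 pixels.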

Interestingly, they found that the relative accuracy of the bottom-up and top-down approaches depended on the dataset. For example, the top-down approach worked best on the fly datasets, possibly because a fly’s small body parts require precise, fine-grained localization. The bottom-up approach, on the other hand, worked best on the mouse dataset. Since mice have larger, coarser features, the authors hypothesized that the bottom-up approach was better at capturing the global positions of the body parts in this case.

To make SLEAP accessible and easy for other researchers to use on their own datasets, the team built a user-friendly graphical user interface (GUI) that lets scientists view the predicted poses and proofread them as needed.

While SLEAP is ready to use in the lab in its current form, the researchers envision several next steps to enhance their framework. One possible direction is to incorporate three-dimensional information from multiple camera views, which could give an even richer picture of animal behavior. The authors also hope to explore several applications in neuroscience.

“We have been applying SLEAP to a large dataset of socially interacting fruit flies engaged in their courtship ritual. By tracking their precise movements, we can reconstruct what each animal is perceiving from an egocentric frame and how they’re dynamically adjusting their behavioral response. […] We’re looking now to build models of how neural computations in the fly brain can generate the movements we are able to quantify with SLEAP,” said Pereira.
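Reconstructing “what each animal is perceiving from an egocentric frame” starts with describing the scene from that animal’s own point of view. The sketch below is a hedged illustration, not the authors’ code: it re-expresses tracked points, such as a partner fly’s body parts, in one animal’s body-centered coordinates. Using the head as the origin and the thorax-to-head direction as the heading are assumptions made for this example.

```python
import math


def to_egocentric(points, head, thorax):
    """Re-express 2D points in one animal's body-centered (egocentric) frame.

    The animal's head becomes the origin (0, 0), and the thorax-to-head
    direction (the way the animal is facing) becomes the +x axis.
    """
    hx, hy = head
    # Heading angle of the thorax->head vector in the original image frame.
    theta = math.atan2(hy - thorax[1], hx - thorax[0])
    # Rotating by -theta aligns the heading with the +x axis.
    c, s = math.cos(-theta), math.sin(-theta)
    result = []
    for x, y in points:
        dx, dy = x - hx, y - hy  # translate so the head sits at the origin
        result.append((c * dx - s * dy, s * dx + c * dy))
    return result
```

After this transform, a partner located straight ahead of the focal animal always lands on the positive x axis, regardless of where the pair is in the arena. Whether positive y′ means the animal’s left or right depends on whether the image y axis points up or down.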

With its high-accuracy annotation of multi-animal video recordings, SLEAP could pave the way for studying the link between neural activity and complex social behaviors. In particular, in behavioral studies that record neural activity and video simultaneously, SLEAP could become an integral tool for identifying which neural patterns are associated with which behaviors.

The original article was uploaded to bioRxiv on September 2, 2020, where the full version is available.