Written by Anika Maskara ’23 & Thiago Tarraf Varella (PSY GS)

It is common in popular culture to imagine human decision making as a clash of two distinct choices. There is a “good option” and a “bad option,” an angel or a devil sitting on our shoulders. Like many dichotomies, though, that view of decision making is misleading. It is true that research suggests we have two different decision-making systems that sometimes disagree about which action to take, but neither is better or worse than the other; they simply use different algorithms to help us decide what to do.

One system (the habitual, or model-free, system) relies on automatic reactions shaped by well-honed past experience, while the other (the goal-directed, or model-based, system) is based on deliberation. In a recent Primer in Current Biology, PhD student Nicole Drummond and Principal Investigator Yael Niv, of Princeton University, explain that these systems often work in tandem to help us make the best decision.

The idea of two different types of decision making is rooted in the distinction between habitual and goal-directed behavior. Take, for example, an experiment in which a rat is trained to press a lever to receive a piece of food. If the rat is demonstrating habitual behavior, it will keep pressing no matter how hungry or full it is. It has associated pressing the lever with a positive outcome, and only retraining it to associate the lever with different, perhaps negative, outcomes will change its behavior. If the rat is instead exhibiting goal-directed behavior, its rate of pressing will drop once it is made to feel full. In this case, the rat has not yet had enough experience with the outcomes of lever pressing to stop deliberating over whether pressing is worth it.

Research using this experimental setup has revealed that rats can exhibit both types of behavior. Rats that are trained extensively tend to keep pressing the lever even after they are made to feel full, exhibiting habitual behavior. Rats that are trained only moderately, however, press less often once they are sated, exhibiting goal-directed behavior. Repetition, it seems, fosters greater reliance on the habitual system, an idea that carries over to humans as well; there is a reason our parents encouraged us, growing up, to say “please” and “thank you” over and over. With enough repetition, good behavior becomes a good habit.
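The logic of this devaluation test can be made concrete with a toy calculation. The sketch below is hypothetical and not taken from the Primer: it assumes food is worth 1.0 to a hungry rat and 0.1 to a sated one, that every press delivers food, that the habitual system simply reuses a value cached during (hungry) training, and that the goal-directed system recomputes the value of pressing from the current worth of the food. The names `habitual_value` and `goal_directed_value` are illustrative only.

```python
# Toy, hypothetical model of the outcome-devaluation test (not from the Primer).
FOOD_VALUE = {"hungry": 1.0, "sated": 0.1}  # assumed worth of food in each state
P_FOOD_GIVEN_PRESS = 1.0  # assumed: every lever press delivers food

# Training happens while the rat is hungry, so the habit caches that value.
CACHED_PRESS_VALUE = P_FOOD_GIVEN_PRESS * FOOD_VALUE["hungry"]


def habitual_value(state: str) -> float:
    """Habitual: reuse the cached number; the current state is ignored."""
    return CACHED_PRESS_VALUE


def goal_directed_value(state: str) -> float:
    """Goal-directed: recompute press -> food -> current worth of food."""
    return P_FOOD_GIVEN_PRESS * FOOD_VALUE[state]


for state in ("hungry", "sated"):
    print(state, habitual_value(state), goal_directed_value(state))
# hungry 1.0 1.0
# sated 1.0 0.1
```

Only the goal-directed value drops when the rat is sated, mirroring the moderately trained rats; the unchanged habitual value mirrors the extensively trained ones.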

The differences between the habitual and goal-directed systems can also be framed in terms of two approaches to decision making in machine learning: model-free and model-based reinforcement learning, respectively. Broadly, reinforcement learning describes how a learning agent chooses actions in its environment based on the rewards it expects those actions to bring, expectations it refines through experience.

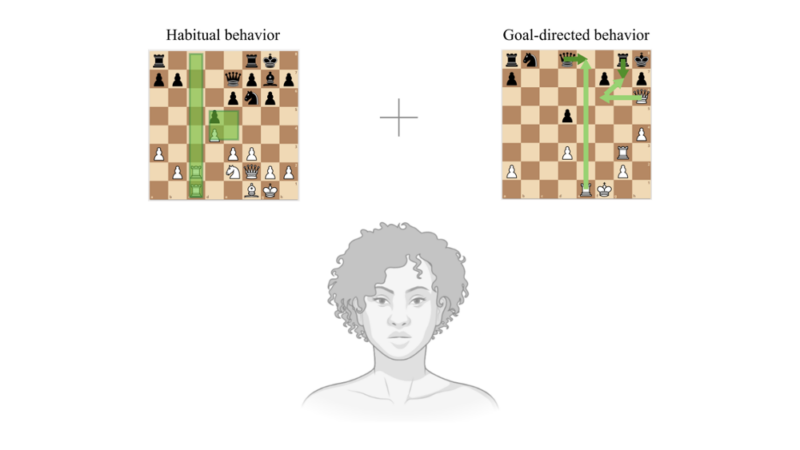

In model-free reinforcement learning, the future reward values of actions are learned directly through trial and error. An agent makes a decision, observes the outcome, and updates the value of that action accordingly (i.e., positive outcomes increase the value of that action, and negative outcomes decrease it). Over time and after much trial and error, the cached values stored by the agent begin to approach the actual expected value of taking each action. All of this sounds a bit abstract, but think, for example, of someone playing a game of chess. In the middle of the game, there are many possible directions the game could go in. So, when deciding which piece to move and where, it is unrealistic for even the most experienced player to predict all the long-run outcomes of that one move. There are too many variables at play. Instead, experienced chess players rely on well-honed values for different board configurations (such as the value of controlling the center of the board), corresponding to model-free decision making.
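The trial-and-error update described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, using a simple two-action task rather than chess: `learn_action_values` and its parameters are hypothetical names, each action pays a reward of 1 with a fixed, made-up probability, the agent chooses epsilon-greedily (usually the action with the best cached value, occasionally a random one), and each cached value is nudged toward the observed outcome by a learning rate `alpha` times the prediction error.

```python
import random


def learn_action_values(reward_prob, n_trials=5000, alpha=0.05, epsilon=0.1, seed=0):
    """Model-free learning of cached action values by trial and error.

    reward_prob: hypothetical dict mapping each action to its chance of paying 1.
    alpha: learning rate; epsilon: probability of trying a random action.
    """
    rng = random.Random(seed)
    values = {action: 0.0 for action in reward_prob}  # cached values start at zero
    for _ in range(n_trials):
        # Usually exploit the best cached value, occasionally explore at random.
        if rng.random() < epsilon:
            action = rng.choice(list(values))
        else:
            action = max(values, key=values.get)
        # Try the action and observe the outcome (reward 1 or 0).
        reward = 1.0 if rng.random() < reward_prob[action] else 0.0
        # Prediction error: how much better or worse than expected it went.
        error = reward - values[action]
        # Positive errors raise the cached value, negative errors lower it.
        values[action] += alpha * error
    return values


cached = learn_action_values({"a": 0.8, "b": 0.2})
print(cached)  # each value should settle near its action's reward probability
```

With a small learning rate, each cached value drifts toward that action's reward probability, the "actual expected value" mentioned above, without the agent ever needing a model of why an action pays off.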

In contrast, model-based reinforcement learning, corresponding to goal-directed behavior, involves building a mental model of the world and simulating actions within it. To choose an action, the agent simulates paths through that model and picks the one expected to yield the best outcome. This is similar to what happens toward the end of a chess game, when the possibilities are more limited and each move is more consequential. Players report planning out all relevant sequences of moves, corresponding to model-based decision making.
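A model-based agent, by contrast, needs an explicit model to simulate. The sketch below assumes a tiny, hypothetical deterministic world, written as a dictionary mapping each state to its actions, the resulting next state, and the immediate reward, and plans by exhaustively simulating every path up to a fixed depth. The state names, rewards, and the `plan` function are illustrative assumptions, not a model from the Primer.

```python
# Hypothetical world model: state -> {action: (next_state, immediate_reward)}.
MODEL = {
    "start": {"left": ("A", 0.0), "right": ("B", 1.0)},
    "A": {"left": ("goal", 10.0), "right": ("start", 0.0)},
    "B": {"left": ("start", 0.0), "right": ("trap", -5.0)},
    "goal": {},  # terminal states offer no further actions
    "trap": {},
}


def plan(state, depth):
    """Simulate every path of up to `depth` steps through MODEL and return
    (best total reward, action sequence that achieves it)."""
    actions = MODEL[state]
    if depth == 0 or not actions:
        return 0.0, []
    best_value, best_path = float("-inf"), []
    for action, (next_state, reward) in actions.items():
        # Mentally "take" the action, then keep planning from where it leads.
        future_value, future_path = plan(next_state, depth - 1)
        total = reward + future_value
        if total > best_value:
            best_value, best_path = total, [action] + future_path
    return best_value, best_path


print(plan("start", 1))  # (1.0, ['right'])
print(plan("start", 2))  # (10.0, ['left', 'left'])
```

With a one-step horizon the planner grabs the small immediate reward, while a two-step horizon lets it find the larger delayed reward, which only a model of the world can reveal before it is experienced.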

Both systems have distinct advantages and disadvantages. Model-free decision making is faster and less computationally intensive, but it is also less adaptable to unfamiliar situations, since it updates action values only through trial and error. Model-based decision making may be more flexible, but it can become inaccurate when there are too many variables and possibilities to simulate. Because of these differences, deciding which system to use for which decision can be a challenge in itself. However, the brain seems to solve this problem rather optimally, using each system in the situations it is best suited for. Experiments have shown, for example, that when a subject has had a lot of experience with a situation, model-free thinking tends to take over, since the subject can be more confident in the values learned from its previous decisions.
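The difference in computational cost can be made concrete with a simple count. Assuming, for illustration only, that every state offers the same number of actions `b` and that the planner looks `d` moves ahead exhaustively, it must simulate b + b^2 + ... + b^d states, whereas a model-free choice needs only one cached-value lookup per available action. The helpers `states_simulated` and `cached_lookups` and the branching factor of 30 are hypothetical, chosen only to suggest a position with many available moves.

```python
def states_simulated(branching: int, depth: int) -> int:
    """States an exhaustive planner must simulate to look `depth` moves ahead
    when every state offers `branching` actions: b + b^2 + ... + b^depth."""
    return sum(branching ** d for d in range(1, depth + 1))


def cached_lookups(branching: int) -> int:
    """A model-free choice reads one cached value per available action."""
    return branching


B = 30  # hypothetical number of available moves per position
print("model-free lookups:", cached_lookups(B))
for depth in range(1, 5):
    print(f"model-based, depth {depth}:", states_simulated(B, depth))
# model-free lookups: 30
# model-based, depth 1: 30
# model-based, depth 2: 930
# model-based, depth 3: 27930
# model-based, depth 4: 837930
```

The model-free cost stays flat while the model-based cost grows exponentially with how far ahead the agent looks.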

Drummond and Niv combine experimental evidence and computational analysis to suggest that the brain favors whichever decision-making system is more confident in its predictions in a given situation. Returning to the chess example, long-run predictions made by the model-based system in the middle of the game are inherently noisy (every step in the mentally simulated path introduces more uncertainty), so it makes sense that the model-free system would be favored there. During the endgame, on the other hand, simulating possible outcomes becomes a better strategy because there are fewer possible actions, which allows the model-based system to make a more confident decision. The two systems can even work together: stored values from model-free learning can help prune away choices that lead to obviously bad outcomes early on, without the need to actually simulate what would happen next.
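One way to picture confidence-based arbitration is to let each system report a prediction together with its uncertainty and defer to the less uncertain one. The sketch below is an illustrative assumption, not Drummond and Niv's model: it treats the model-based variance as growing linearly with the number of simulated steps (each step adds a fixed amount of noise, `step_noise`) and the model-free variance as shrinking with experience. `Estimate`, `arbitrate`, and all numbers used are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # predicted long-run reward of an action
    variance: float  # how uncertain that prediction is


def model_based_estimate(simulated_value: float, depth: int, step_noise: float = 0.05) -> Estimate:
    # Assumption: each simulated step adds independent noise, so uncertainty
    # grows linearly with the number of steps simulated.
    return Estimate(simulated_value, depth * step_noise)


def model_free_estimate(cached_value: float, n_experiences: int, prior_variance: float = 1.0) -> Estimate:
    # Assumption: cached values grow more reliable with experience.
    return Estimate(cached_value, prior_variance / (1 + n_experiences))


def arbitrate(mb: Estimate, mf: Estimate) -> tuple[str, Estimate]:
    """Defer to whichever system is more certain (lower variance)."""
    if mb.variance < mf.variance:
        return "model-based", mb
    return "model-free", mf


habit = model_free_estimate(cached_value=0.6, n_experiences=4)  # variance 0.2
print(arbitrate(model_based_estimate(0.7, depth=20), habit)[0])  # model-free
print(arbitrate(model_based_estimate(0.7, depth=2), habit)[0])   # model-based
```

Holding the model-free estimate fixed, a deep (mid-game) simulation is too noisy to trust, so the cached value wins; a shallow (endgame) simulation is precise enough that the model-based prediction takes over.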

Clearly, human decision making is a lot more complicated than a single dichotomy. Arbitrating between the systems is not a moral battle between good and evil; it can be thought of as a complex optimization process, where the agent is always trying to do what is best under the constraints of limited resources. False dichotomies, such as the idea that making decisions through habit is not smart or that habits and planning do not work together, are not new to psychology or even to science more generally. Breaking these misconceptions is a critical step towards building a precise understanding of our own nature.

Yael Niv, a Professor of Psychology and Neuroscience and the last author of this Primer, explained that this research fits into a broader context of applications in psychotherapy: “you might imagine that what is called ‘cognitive restructuring’ in cognitive behavioral therapy would affect model-based actions more, whereas experiential learning as happens through exposures in cognitive behavioral therapy would affect model-free values”. Her lab will soon publish a book chapter called “Precision Psychiatry” discussing the implications of what we know about reinforcement learning for cognitive behavioral therapy.

This Primer was published in Current Biology on August 3, 2020.