Review written by Renee Waters (PSY)

Humans tend to make individual choices based on a series of past experiences, decisions, and outcomes. Just think about the last time you had some terrible takeout: you might decide not to eat at that particular restaurant again based on your previous experience. Maybe you take the same route to work every day because, in the past, there has been less traffic on that route. The effects that past experiences have on choices are often termed sequential biases. These biases are present everywhere, especially in value-based decision making. You might wonder, what are the neural mechanisms driving this phenomenon? Christine Constantinople, a former postdoc at Princeton University and now an assistant professor at NYU, began to explore this question along with colleagues in the Brody Lab at Princeton.

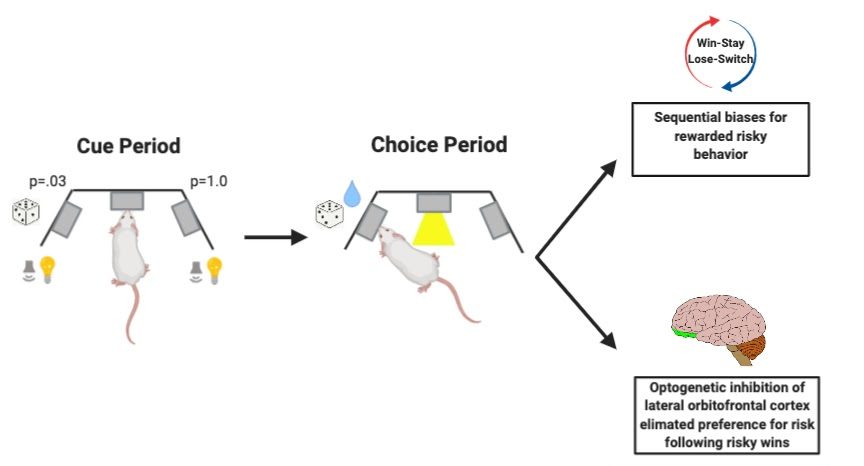

In a recent paper, Constantinople et al. used animal models to explore which brain regions are involved in this type of biased decision-making. The researchers developed a novel task and trained rats to choose between explicitly cued, guaranteed rewards and risky, probabilistic rewards. The task used a three-port setup in which one side of the center port offered guaranteed water rewards with consistent cues, such as flashing lights and auditory tones, and the other side offered probabilistic rewards with inconsistent visual and auditory cues. The guaranteed and probabilistic options were randomly assigned to a side on each trial. Additionally, the volume of the water reward varied and was chosen independently and randomly. Following several months of training, the researchers assessed whether rats understood the meaning of the cues by comparing their choices against each option's expected value, which is the reward probability multiplied by the reward volume. Well-trained rats tended to choose the option with the higher expected value, meaning that on trials when both sides offered a reward, rats chose the larger reward, and when one side offered no reward, they chose the other side.
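The expected-value comparison described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the authors' analysis code: the `Offer` class, the function names, and the example probabilities and volumes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    probability: float  # chance the reward is delivered (1.0 for the guaranteed port)
    volume_ul: float    # water volume; microliters are an assumed unit


def expected_value(offer: Offer) -> float:
    """Expected value = reward probability multiplied by reward volume."""
    return offer.probability * offer.volume_ul


def higher_value_choice(safe: Offer, risky: Offer) -> str:
    """Return which option an expected-value maximizer would pick."""
    ev_safe = expected_value(safe)
    ev_risky = expected_value(risky)
    if ev_risky > ev_safe:
        return "risky"
    if ev_safe > ev_risky:
        return "safe"
    return "tie"


# Hypothetical trial: a small guaranteed reward vs. a larger 50/50 gamble.
safe = Offer(probability=1.0, volume_ul=12.0)
risky = Offer(probability=0.5, volume_ul=48.0)
print(expected_value(safe), expected_value(risky), higher_value_choice(safe, risky))
# prints: 12.0 24.0 risky
```

A rat that has learned the cues should, on average, lean toward whichever option this comparison favors.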

Constantinople and colleagues found that rats exhibited sequential biases just like humans and non-human primates. If rats chose the risky, probabilistic option and were rewarded, they tended to gamble again and choose the risky option on the next trial. This behavior indicates a win-stay bias rather than a lose-switch bias. Additionally, because the risky and safe ports changed sides across trials, the risky win-stay bias was independent of side presentation: it could not be explained by a side bias (e.g., preferring left vs. right). This indicates that sequential biases in rodents are expressed in non-spatial, task-dependent ways, similarly to humans. Now that the researchers had successfully modeled this behavior in rodents, they wondered, how might the brain represent non-spatial sequential biases?
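One simple way to put a number on a risky win-stay bias is to compare how often the animal chooses the risky option right after a rewarded risky choice with how often it chooses risky overall. The sketch below assumes that definition, which may differ from the measure used in the paper; the function name and the toy trial sequence are hypothetical.

```python
def risky_win_stay_bias(trials):
    """Return P(risky | previous trial was a rewarded risky choice) - P(risky).

    `trials` is a list of (choice, rewarded) tuples in session order, where
    choice is "safe" or "risky" and rewarded is a bool. Returns None if no
    rewarded risky trial is followed by another trial.
    """
    n_risky = sum(1 for choice, _ in trials if choice == "risky")
    baseline = n_risky / len(trials)

    # Choices made on the trial immediately after a rewarded risky choice.
    after_win = [
        trials[i + 1][0]
        for i in range(len(trials) - 1)
        if trials[i] == ("risky", True)
    ]
    if not after_win:
        return None

    p_stay = after_win.count("risky") / len(after_win)
    return p_stay - baseline


# Toy session (made-up data): one risky win, followed by another risky choice.
session = [("risky", True), ("risky", False), ("safe", True), ("safe", True)]
print(risky_win_stay_bias(session))  # prints: 0.5
```

A positive value means the animal gambles more than usual after winning a gamble. Because choices are labeled as safe or risky rather than left or right, the measure ignores which side the risky port was on.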

Previous research pointed them to the lateral orbitofrontal cortex (lOFC), a brain region that has been shown to be important for flexible behavior under dynamic task contingencies. For example, lesion experiments have implicated lOFC in tracking rewards and other evaluative processes, such that lesions to this region produce impairments in these behaviors. However, while studies demonstrate that the OFC is involved in behavioral flexibility, most of these studies have used only tasks in which trial-by-trial learning improves behavioral performance. Constantinople et al. aimed to explore whether lOFC’s role in behavioral flexibility drives sequential biases even when these biases result in suboptimal or deleterious choices/outcomes.

To do this, the researchers recorded from neurons in the lOFC of rats while they performed the aforementioned task. They found that lOFC neurons had transient responses at the beginning of each trial, and the magnitude of the response reflected whether the previous trial had been rewarded. Constantinople and colleagues predicted that behavior driven by sequential biases may require the activity of populations of neurons, so they simultaneously recorded from multiple neurons during this same task. To analyze the population data, they used tensor component analysis (TCA). TCA is an extension of the more commonly used principal component analysis and serves to reduce the dimensionality of complex, multi-neuron data into a simpler form for analysis of trial-to-trial activity. The research team used TCA to extract neuron factors (neuron activity weights), temporal factors (time-varying dynamics within trials), and trial factors (dynamics across trials). They found that trial factors were significantly modulated by the reward history of the past two trials and that, after a reward, overall lOFC activity decreased on the following trial. Altogether, these data suggest that lOFC activity represents reward history, most notably during the cue presentation.
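To make the three kinds of TCA factors concrete, the sketch below shows the model form that TCA assumes: the activity of a neuron at a given time on a given trial is approximated as a sum over components of (neuron weight × temporal weight × trial weight). It only reconstructs data from given factors and does not perform the fitting step. The function name and the toy numbers are hypothetical, and this is not the authors' code.

```python
def reconstruct(neuron_factors, temporal_factors, trial_factors):
    """Rebuild a neurons x time x trials array from rank-one components.

    Each argument is a list with one entry per component; entry r holds that
    component's weights over neurons, time points, or trials respectively.
    """
    n_components = len(neuron_factors)
    n_neurons = len(neuron_factors[0])
    n_time = len(temporal_factors[0])
    n_trials = len(trial_factors[0])

    tensor = [[[0.0] * n_trials for _ in range(n_time)] for _ in range(n_neurons)]
    for r in range(n_components):
        for n in range(n_neurons):
            for t in range(n_time):
                for k in range(n_trials):
                    tensor[n][t][k] += (
                        neuron_factors[r][n]
                        * temporal_factors[r][t]
                        * trial_factors[r][k]
                    )
    return tensor


# One toy component: two neurons, three time bins, two trials (made-up values).
neuron_w = [[1.0, 2.0]]          # the second neuron participates twice as strongly
temporal_w = [[0.0, 1.0, 0.5]]   # a transient that peaks in the second time bin
trial_w = [[1.0, 0.5]]           # the component is half as strong on the second trial

activity = reconstruct(neuron_w, temporal_w, trial_w)
print(activity[1][1][0], activity[1][2][1])  # prints: 2.0 0.5
```

In this picture, a trial factor that shrinks on trials following a reward corresponds to a population-level response that is weaker after rewarded trials.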

Next, the team investigated what would happen to behavior and learning if they disrupted these dynamics during the cue period. They predicted that disrupting activity would negatively affect trial-by-trial learning. Constantinople et al. used optogenetics to inhibit lOFC activity during the cue period. Surprisingly, they found that spatial win-stay or lose-switch biases were not affected by inhibition during the cue period. However, in contrast to the cue period, lOFC activity at the time of the choice reflected whether rats chose the safe or risky option. The researchers saw that different subsets of neurons showed differential activity on rewarded trials when rats chose safe or risky options. Since this activity may require encoding of left/right choices during this period, they next wanted to explore whether the spatial win-stay/lose-switch bias (meaning side preference) is affected by inhibition of lOFC neurons. Interestingly, optogenetic inhibition during the choice period eliminated risky win-stay biases on subsequent trials.

Constantinople et al.’s study supports the role of the OFC in updating risk attitudes and value-based decision making. They found that lOFC reflected reward history most strongly at trial initiation, before the animal had any information about the current trial. Constantinople and colleagues' work also revealed a difference between rat and primate OFC: many neurons were selective for the side the rat decided to poke on, and such selectivity is not seen in primates. Altogether, these data implicate the OFC in risky win-stay behavior, in addition to other value-based decision-making processes. Future work on this topic may include investigating the specific subsets of neurons within the OFC that may be responsible for the risky win-stay bias and for updating dynamic risk preferences. This work makes great strides in understanding sequential biases and the OFC’s role in adaptive behaviors.

Christine Constantinople, first author of this study and former postdoc in the Brody Lab, explained that the long training times (~6 months) required for the behavioral assay used in these experiments were a major challenge of this work. She noted that "the major resource that made this work possible was a high-throughput behavioral training facility developed in the Brody lab that uses computerized procedures to train hundreds of rats (~300) per day. This facility allowed us to explore different training procedures until we found one that was reasonably efficient, and it also generated dozens of trained rats in parallel (36 in this study)."

The original paper was published in eLife on November 6, 2019. Please follow this link to view the full version.