Review written by Eleni Papadoyannis (PNI)

How do humans control a complex system like the brain? Over the years, neuroscientists have discovered numerous methods to do exactly that. Applying chemicals, such as muscimol, can drive inhibition to shut down a brain region. Alternatively, shining light can selectively activate certain cell types through the photosensitive protein channelrhodopsin. Sending electrical impulses via electrodes in deep brain stimulation (DBS) can also control regional activity in humans. Causal manipulation of the brain not only offers incredible insight into hypotheses relating neural activity to behavior, but also serves as a clinical tool. Electrical and magnetic stimulation methods have been used as therapies for patients with a variety of diseases and disorders, such as DBS to control motor disruption in Parkinson’s. A major limitation of many stimulation methods, however, is that the protocol is static while the brain is plastic: as the brain naturally changes over time, the same stimulation may no longer elicit the intended response.

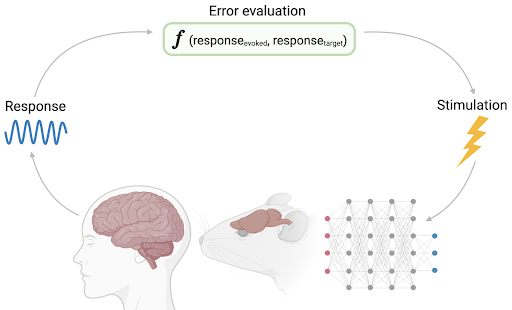

To address this issue, a team led by Tafazoli and MacDowell at the Princeton Neuroscience Institute introduced a novel system called adaptive closed-loop pattern stimulation (ACLS). This system not only adapts its stimulation over time in order to control activity, but does so precisely for groups of cells (i.e., at the population level). The system works iteratively through three steps: 1) observing evoked activity, such as firing rate, 2) determining how well the evoked activity matched the intended response by evaluating an error through a cost function, such as the Euclidean distance, and 3) updating the next stimulation pattern via a machine learning (ML) algorithm to minimize the subsequent error. ACLS was implemented in silico with a deep convolutional neural network (CNN) as well as in vivo in awake mice.

CNNs are a type of neural network: an algorithm designed to receive raw input (such as image pixels) and recognize patterns (such as the category of the image). CNNs in particular have been shown to approximate some aspects of visual processing, with representations comparable to those seen in the brain. In this study, ACLS was used to control the activity of a target layer within a 5-layer CNN. This network was initially trained to classify a dataset of handwritten digits, 0-9, with greyscale images serving as visual input. The experimenter first chose an arbitrary activity pattern in the target layer that corresponded to a digit. ACLS started by iteratively presenting randomly generated input images, then measured target layer activity and calculated the cost function. In each block of learning, the “best” input pattern was chosen as the pattern with the minimum cost function value and was then randomly perturbed in the subsequent block until the error value was reduced or reached a floor. Over time, ACLS adjusted the input pattern to the network so that the activity of the target layer was close to the desired activity. In the final block, the CNN categorized the learned inputs as the target images with ~90% classification performance. In other words, ACLS initially knew nothing about how to control the network but learned to create a stimulus image that made the network think it had seen the number 1.
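The block-wise search described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: a single random linear layer with a ReLU (`target_layer`) stands in for the CNN's target layer, and every function name, the block size, and the perturbation scale are hypothetical choices. The learner only ever sees the cost of each candidate, never the weights of the network it is trying to control.

```python
import math
import random


def target_layer(weights, image):
    """Toy stand-in for the CNN's target layer: a linear map followed by ReLU."""
    return [max(0.0, sum(w * p for w, p in zip(row, image))) for row in weights]


def euclidean_cost(response, target):
    """Error between the evoked activity and the desired activity."""
    return math.sqrt(sum((r - t) ** 2 for r, t in zip(response, target)))


def perturb(image, scale, rng):
    """Randomly jitter each pixel, keeping greyscale values in [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, scale))) for p in image]


def learn_stimulus(observe, target, n_pixels, n_blocks=200, block_size=20,
                   scale=0.1, seed=0):
    """Block-wise search: perturb the current best input, keep the lowest-cost one.

    `observe` maps an input image to the evoked activity; it is the only
    access the learner has to the system being controlled.
    """
    rng = random.Random(seed)
    best = [rng.random() for _ in range(n_pixels)]   # random starting image
    best_cost = euclidean_cost(observe(best), target)
    history = [best_cost]
    for _ in range(n_blocks):
        # Present a block of random perturbations of the current best input.
        candidates = [perturb(best, scale, rng) for _ in range(block_size)]
        costs = [euclidean_cost(observe(c), target) for c in candidates]
        winner = min(range(block_size), key=costs.__getitem__)
        # Assumption: keep the previous best if no candidate in the block beats it.
        if costs[winner] < best_cost:
            best, best_cost = candidates[winner], costs[winner]
        history.append(best_cost)
    return best, history


if __name__ == "__main__":
    rng = random.Random(1)
    n_pixels, n_units = 16, 8
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_pixels)] for _ in range(n_units)]
    # The desired activity is whatever a hidden "digit" image would evoke.
    target = target_layer(weights, [rng.random() for _ in range(n_pixels)])
    _, history = learn_stimulus(lambda img: target_layer(weights, img), target, n_pixels)
    print(f"cost after first and last block: {history[0]:.3f} -> {history[-1]:.3f}")
```

Because a candidate is accepted only when it lowers the cost, the recorded error can never increase from one block to the next in this sketch; how quickly it falls depends on the perturbation scale and block size chosen here.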

To challenge its learning ability, parameters for noise, drift, and dataset complexity were added during training. These variables are key to simulating representational learning, as they account for real-world factors that could alter brain activity over time (such as adaptation, movement of an electrode, or changes in internal state, like arousal or motivation levels). Learning was fastest when noise levels were low and predictably slowed as noise increased. Different levels of drift (changes in the network's weights) had a similar effect on learning rate. Finally, complexity was tested by training the CNN to categorize a larger dataset of letters. As more letters were added, the task became more challenging and ACLS took more time to learn to control the network. These results suggest that ACLS can control an elaborate CNN, though more work needs to be done to improve learning rates for increasingly complex systems.
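To make noise and drift concrete, here is one way they might be modeled in a toy system. This is a sketch under assumptions that are not taken from the preprint: noise is additive Gaussian jitter on each response, drift is a Gaussian random walk on the weights, and the population is a small linear map. All names and sizes are hypothetical. The example tracks the error of a stimulus that is never updated, which illustrates why a static protocol can degrade as the system changes.

```python
import math
import random


def respond(weights, stimulus, noise_sd, rng):
    """Linear toy population response with additive Gaussian noise on each unit."""
    return [sum(w * s for w, s in zip(row, stimulus)) + rng.gauss(0.0, noise_sd)
            for row in weights]


def drift(weights, drift_sd, rng):
    """Return a copy of the weights after one random-walk drift step."""
    return [[w + rng.gauss(0.0, drift_sd) for w in row] for row in weights]


def error(response, target):
    """Euclidean distance between the evoked and desired activity."""
    return math.sqrt(sum((r - t) ** 2 for r, t in zip(response, target)))


def static_stimulus_errors(n_steps, noise_sd, drift_sd, seed=0):
    """Error over time of a stimulus that stays fixed while the system drifts."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(3)]
    stimulus = [rng.random() for _ in range(4)]
    # The target is the noise-free response at time zero, so the fixed
    # stimulus is perfect before any noise or drift is applied.
    target = respond(weights, stimulus, 0.0, rng)
    errors = []
    for _ in range(n_steps):
        errors.append(error(respond(weights, stimulus, noise_sd, rng), target))
        weights = drift(weights, drift_sd, rng)
    return errors


if __name__ == "__main__":
    for drift_sd in (0.0, 0.02, 0.1):
        errs = static_stimulus_errors(100, noise_sd=0.05, drift_sd=drift_sd)
        print(f"drift_sd={drift_sd}: first error {errs[0]:.3f}, last error {errs[-1]:.3f}")
```

With both parameters set to zero the error stays at exactly zero. Any nonzero drift lets the weights wander away from their starting values, which is the situation an adaptive, closed-loop update is meant to handle.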

Following its success in simulations, ACLS was then tested in mice acutely implanted with silicon probes in the primary visual cortex. These probes have multiple channels to simultaneously deliver electrical stimulation and record from different populations of neurons. Once implanted, mice passively observed images presented on a screen and their respective responses were catalogued as target response patterns. As seen in the CNN simulations, learning by error minimization brought cortical activity roughly 90% closer to target patterns and occurred rapidly, within ~12 minutes per target, with high selectivity.

To more thoroughly test the physiological relevance of ACLS, the team explored whether stimulation-evoked responses show adaptation at the population level. Adaptation is one of many mechanisms by which the brain changes with experience. In the experiment, three stimuli were presented in succession: the first two as visual images and the last as an electrical stimulation. When the second image matched the first, firing rates were reduced, confirming what is commonly described as visual adaptation in the literature. They then tested whether the electrical stimulation pattern corresponding to the second image would show an adaptation effect if presented immediately after. Responses to ACLS-learned electrical stimulation patterns were also adapted when preceded by the equivalent visual stimulus, suggesting that ACLS does produce activity physiologically similar to natural responses in the brain.

Taking into account the natural plasticity of the brain and the diversity of experimental approaches in neuroscience, Tafazoli, MacDowell, and colleagues built ACLS as a framework that is flexible at all three steps. Neural responses can be measured from the single-neuron level up to regional BOLD signal, and can even take the form of behavior, such as choice or motor action. Error functions can be defined differently, for example by using cosine similarity instead of Euclidean distance. Stimulation can also be delivered optically or magnetically. ACLS avoids the need to model and make assumptions about the network because it only has access to the initial pattern input, and learns in a closed loop by reducing error values. This process of updating is especially powerful for quickly controlling activity despite changes in state, varying noise levels, and individual differences between animals and/or patients. In this study, ACLS was only applied in one region, visual cortex, but further studies can expand upon its potential to work across many regions for larger coordination of control.
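The choice of error function matters because different functions penalize different kinds of mismatch. The short Python sketch below is an illustration with made-up activity values, not data or code from the study. It compares Euclidean distance with a cosine-based distance, defined here as one minus cosine similarity (an assumed convention), on a response that has the right pattern across units but twice the target firing rate.

```python
import math


def euclidean_distance(response, target):
    """Sensitive to both the shape and the overall magnitude of the response."""
    return math.sqrt(sum((r - t) ** 2 for r, t in zip(response, target)))


def cosine_distance(response, target):
    """1 - cosine similarity: sensitive to the pattern across units, not its scale."""
    dot = sum(r * t for r, t in zip(response, target))
    norm = math.hypot(*response) * math.hypot(*target)
    if norm == 0.0:
        return 1.0  # assumption: treat an all-zero pattern as maximally dissimilar
    return 1.0 - dot / norm


if __name__ == "__main__":
    target = [1.0, 2.0, 3.0]   # hypothetical desired firing rates
    scaled = [2.0, 4.0, 6.0]   # same pattern, twice the rate
    print("Euclidean:", euclidean_distance(scaled, target))
    print("Cosine:   ", cosine_distance(scaled, target))
```

Under the Euclidean cost the scaled response counts as a clear miss, while under the cosine cost it is essentially a perfect match. Which behavior is preferable depends on whether absolute firing rates or only the relative pattern across the population should be controlled.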

Ultimately, ACLS has broad applications in both academic and clinical domains. Because this framework can control populations of neurons, new hypotheses that require specific causal directions can now be tested. Furthermore, through its adaptive and online foundation, ACLS can optimize the precision and efficacy of clinical procedures, like DBS, for diseases, such as Parkinson’s, to produce tailored, individual treatments. Approaches like ACLS pave the way for a future of personalized, self-sustaining therapies, allowing us to gain more control in a system as complex as the brain.

Camden MacDowell, a graduate student in the Buschman lab at PNI and co-first author, shared, “we are excited by the results of this project and look forward to extending the use of ACLS to learn to control behavior and rescue function in disease states.”

This original preprint was submitted to bioRxiv on March 16, 2020. Please follow this link to view the full version.